lossmaps: a Python package to analyse and plot lossmaps

Overview

The lossmaps tool provides an easy and consistent way to analyse and plot loss maps, both simulated and measured. It integrates with NXCALS and SWAN to extract measured BLM data, but can also be run locally (to plot simulated loss maps, as the NXCALS integration is only possible via SWAN).

Installation

The package is not (yet) available on PyPI, but is hosted on the collimation EOS web space. To install locally, just run

pip install /eos/project/c/collimation-team/software/lossmaps

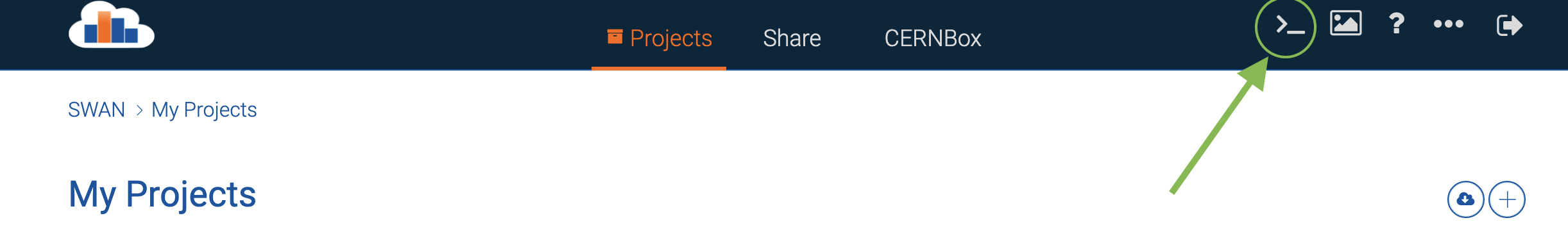

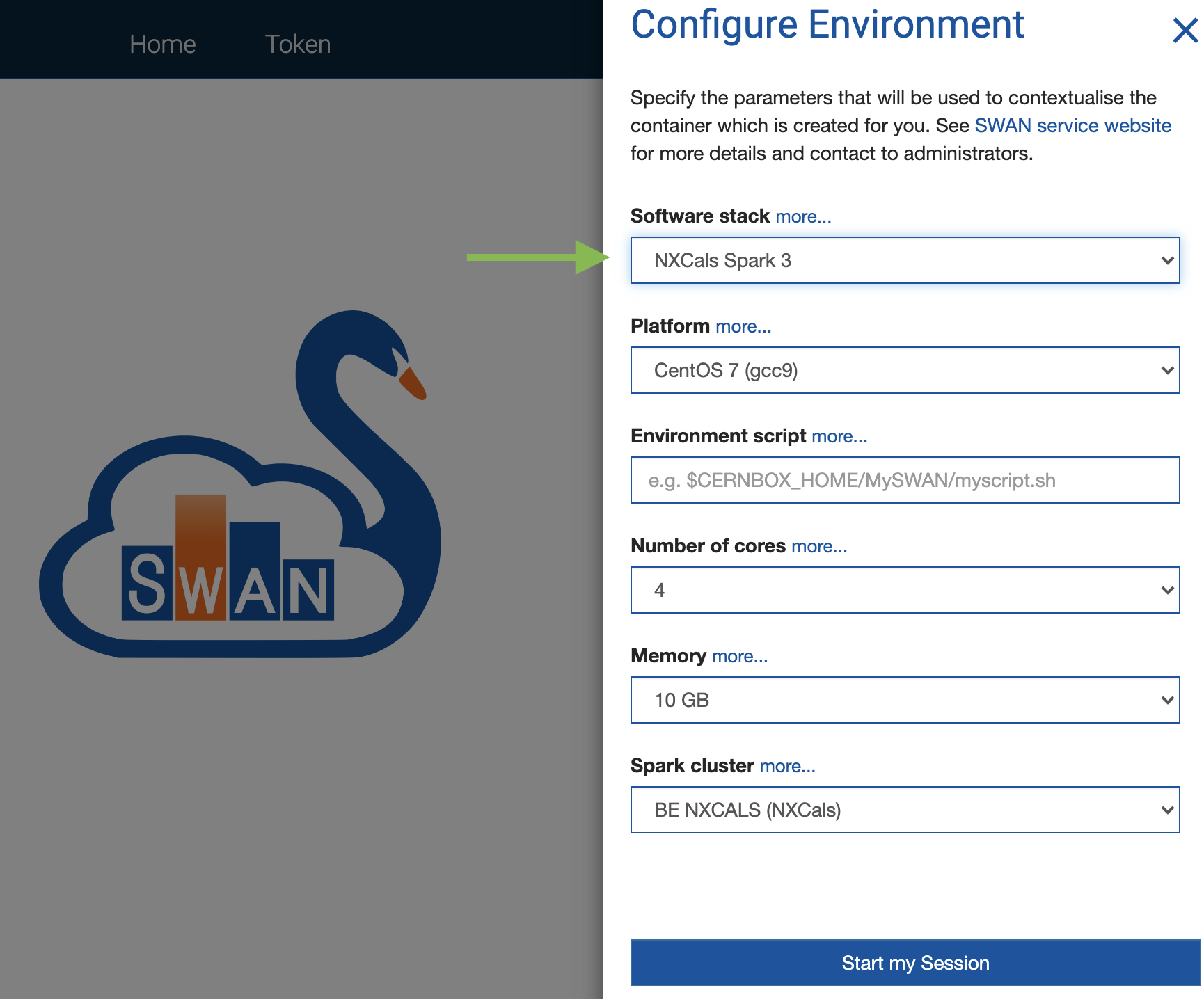

in your local terminal to add the package to your Python environment. This won't work on SWAN however, because SWAN doesn't allow you to install packages in the main environment. Instead, after connecting to SWAN, open a terminal:

and install the package in user mode:

pip install --user --editable /eos/project/c/collimation-team/software/lossmaps

This is sufficient to use the package locally. If, however, you want to create a new measured loss map by retrieving the BLM data from NXCALS, a few extra steps are needed. First, you need to request access to NXCALS via this link, choosing the CMW system and PRO environment. Furthermore, whenever you connect to SWAN, it has to be loaded with the correct software stack and Spark needs to be activated (see below).

Quick examples

A quick example to plot a loss map from simulated data:

import pathlib

import lossmaps as lm

ThisLM = lm.SimulatedLossMap(lmtype=lm.LMType.B1H, machine=lm.Machine.LHC)

path = pathlib.Path('./RunIII_2022_beta30cm')

posfile = path / 'MADX' / 'all_optics_B1.tfs'

aperfiles = list(path.glob('Results/2022_b30_K2_B1H_s*/aperture_losses.dat'))

collfiles = list(path.glob('Results/2022_b30_K2_B1H_s*/coll_summary.dat'))

ThisLM.load_data_SixTrack_K2(

aperture_losses=aperfiles,

coll_summary=collfiles,

positions=posfile,

cycle_aperture_from='tcp.c6l7.b1'

)

lm.plot_lossmap(ThisLM)

And a quick example to plot a loss map from measured data:

import pathlib

import lossmaps as lm

ThisLM = lm.MeasuredLossMap(timestamp='2021-10-26 21:45:05', lmtype=lm.LMType.B1H)

ThisLM.energy = 450

ThisLM.betastar = 1100

ThisLM.machine = lm.Machine.LHC

ThisLM.load_data_nxcals(spark)

lm.plot_lossmap(ThisLM)

where spark is the variable initialised after connecting to Spark (see below).

Constructing a SimulatedLossMap from SixTrack (K2 and FLUKA)

To create a loss map from simulated data, we first create a SimulatedLossMap object to hold the data:

import lossmaps as lm

ThisLM = lm.SimulatedLossMap(lmtype=lm.LMType.B1H, machine=lm.Machine.LHC)

There is only one variable that is required at the initialisation of the SimulatedLossMap object, which is lmtype. It expects an LMType (which is an Enum class, see below). Furthermore, it is useful (but not required at this stage) to set the variable machine as well, as it sets the warm regions and plot regions internally.

Importing data from K2 simulations

Next we want to load the data. For K2, it expects the aperture_losses and coll_summary files, and a twiss file containing (at least) the positions of the collimators:

import pathlib

path = pathlib.Path('./RunIII_2022_beta30cm')

posfile = path / 'MADX' / 'all_optics_B1.tfs'

aperfiles = list(path.glob('Results/2022_b30_K2_B1H_s*/aperture_losses.dat'))

collfiles = list(path.glob('Results/2022_b30_K2_B1H_s*/coll_summary.dat'))

ThisLM.load_data_SixTrack_K2(

aperture_losses=aperfiles,

coll_summary=collfiles,

positions=posfile,

cycle_aperture_from='tcp.c6l7.b1'

)

The files need to be neither compressed nor combined; in the above example aperfiles and collfiles are just lists of the output files of individual runs without any post-processing. If we started tracking at the jaw of the primary collimator (as is usually the case in collimation simulations), the position of the aperture losses will be given with respect to the position of that primary collimator. The option cycle_aperture_from can then be used to specify the element at which tracking started, to correct for this effect.

Similarly, the option cycle_positions_from can be used to specify which element inside the twiss file should be used as the reference for s=0. Typically this is already correct, but if for whatever reason the MAD-X lattice starts at, say, IP3, this can be shuffled back by setting cycle_positions_from='IP1'. Alternatively, this option can be used to reshuffle the lattice on purpose; e.g. setting cycle_positions_from='IP5' will produce a loss map that starts at IP5 and has IP1 in the middle.
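Both cycling options boil down to a shift of the longitudinal coordinate modulo the ring circumference. The following is a minimal sketch of that idea in plain Python, not the package's implementation: the helper names cycle_positions and shift_relative_losses, the toy element table, and the 100 m circumference are all assumptions made for illustration.

```python
def cycle_positions(positions, start_element, circumference):
    """Shift s so that start_element sits at s=0, wrapping around the ring.

    positions: dict mapping element name -> s position in metres.
    Hypothetical helper for illustration; not part of lossmaps.
    """
    s0 = positions[start_element]
    shifted = {name: (s - s0) % circumference for name, s in positions.items()}
    # Return the elements ordered by their new s position
    return dict(sorted(shifted.items(), key=lambda item: item[1]))


def shift_relative_losses(s_relative, s_start, circumference):
    """Convert loss positions given relative to the tracking start
    into absolute positions along the ring (hypothetical helper)."""
    return [(s + s_start) % circumference for s in s_relative]


# Toy ring of 100 m with four made-up elements
ring = {"IP1": 0.0, "IP3": 25.0, "IP5": 50.0, "IP7": 75.0}

# Reshuffle so that the ring starts at IP5; IP1 ends up in the middle
cycled = cycle_positions(ring, "IP5", circumference=100.0)
print(cycled)

# Losses recorded relative to a tracking start located at s = 75 m
absolute = shift_relative_losses([0.0, 30.0, 40.0], s_start=75.0, circumference=100.0)
print(absolute)
```

The modulo keeps every position inside the range from 0 up to the circumference, so elements located before the new start wrap around to the end of the ring.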

There are three more options that are important:

- backwards=False: if this is True, the positions data is reversed (which needs to be done in case of beam 2)
- interpolation=0.1: the interpolation resolution used in SixTrack (in meters)
- append=False: if this is True, the data will be appended to a previous load (for instance to generate a loss map that shows both beams)
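To make the backwards and interpolation options more concrete, here is a small sketch in plain Python. It assumes that reversing the positions means mirroring s around the ring (s becomes circumference minus s) and that the aperture losses are counted in bins as wide as the interpolation resolution; the package may do either differently. The function names reflect and bin_losses are hypothetical.

```python
from collections import Counter


def reflect(s_values, circumference):
    """Mirror positions around the ring (assumed convention: s -> C - s)."""
    return [(circumference - s) % circumference for s in s_values]


def bin_losses(s_values, resolution=0.1):
    """Count losses per bin of width `resolution` (in metres).

    Returns a dict mapping bin index -> number of losses in that bin,
    where bin k covers the range [k * resolution, (k + 1) * resolution).
    """
    counts = Counter(int(s // resolution) for s in s_values)
    return dict(sorted(counts.items()))


# Mirror three made-up positions on a 100 m ring
mirrored = reflect([10.0, 90.0, 0.0], circumference=100.0)
print(mirrored)

# Four made-up aperture losses, binned at the default 0.1 m resolution
binned = bin_losses([0.05, 0.07, 0.25, 1.02])
print(binned)
```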

Importing data from FLUKA simulations

Importing data from FLUKA simulations is very similar to the K2 case. The tool expects the aperture_losses and fort.208 files, a twiss file containing (at least) the positions of the collimators, and a collgaps file to link the collimator IDs to their names:

import pathlib

path = pathlib.Path('./RunIII_2022_beta30cm')

collgaps = path / 'Input' / 'collgaps.dat'

posfile = path / 'MADX' / 'all_optics_B1.tfs'

aperfiles = list(path.glob('Results/2022_b30_FLUKA_B1H_s*/aperture_losses.dat'))

fort208files = list(path.glob('Results/2022_b30_FLUKA_B1H_s*/fort.208'))

ThisLM.load_data_SixTrack_FLUKA(

aperture_losses=aperfiles,

fort208=fort208files,

positions=posfile,

collgaps=collgaps,

cycle_aperture_from='tcp.a.b1'

)

All other options to the command are the same as in the K2 case above.

Constructing a MeasuredLossMap from BLM data on NXCALS

N.B. This section only covers retrieving a new measured loss map from NXCALS, which requires some extra steps. If you just want to load an existing loss map, see the section on accessing existing loss maps further below; to plot it, see the next section.

Connecting to NXCALS

To be able to retrieve NXCALS data, you need access to NXCALS, and you need to start a SWAN session with the NXCALS and Spark libraries enabled. For this, choose "NXCals and Spark 3" from the software stack in the loading screen:

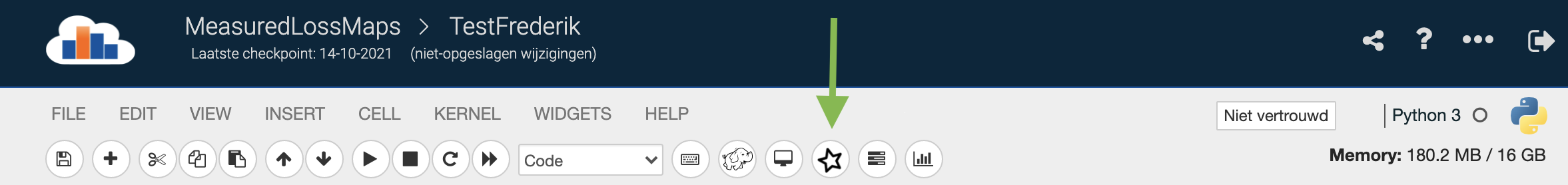

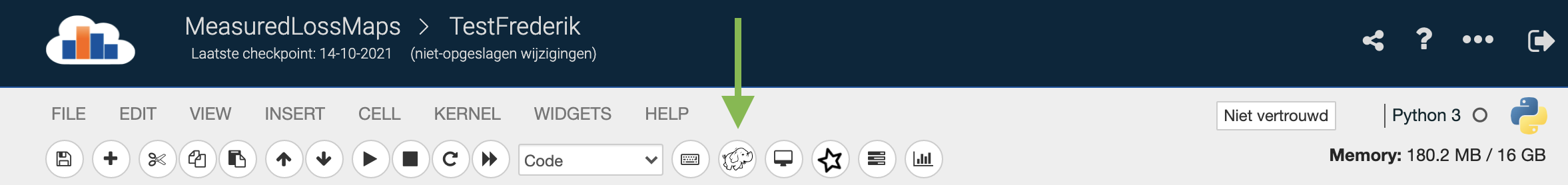

Next, inside the Jupyter notebook, click the star to connect to the Spark cluster:

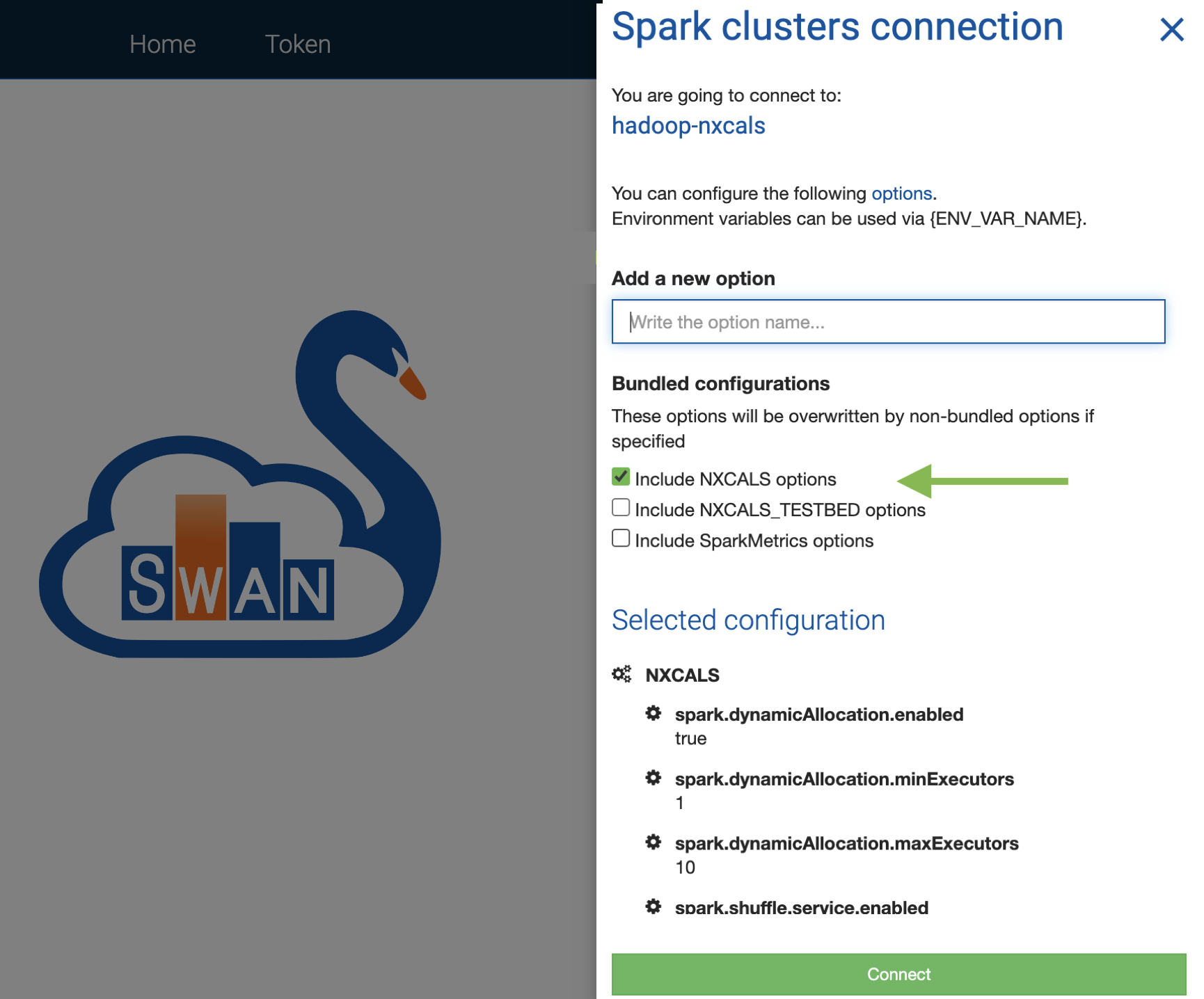

It will ask for your Kerberos password, and then give a list with options. Make sure to choose the NXCALS options:

and connect to Spark; this might take a while. After about a minute you should get a message saying you are connected. The notebook now contains a variable spark which represents the spark session you're connected to. This variable needs to be passed to any function calling NXCALS.

Importing measured data

To create a loss map from measured data, we first construct a MeasuredLossMap object similar to the simulated case:

import lossmaps as lm

ThisLM = lm.MeasuredLossMap(timestamp='2021-10-26 21:45:05', lmtype=lm.LMType.B1H)

There are two variables that are required at the initialisation of the MeasuredLossMap object: lmtype (similar to the SimulatedLossMap above), and timestamp. The latter represents the start of data taking (and also serves as the identification of the loss map). The amount of BLM data retrieved from NXCALS is defined by a time interval, from timestamp to timestamp + duration (where duration defaults to 30 s). The background data is assumed to be inside the same time interval. If that is not the case, the start of background data can be specified with tback_start. In both cases, the number of background acquisitions can be specified with bckgr_aq, which defaults to 10 s.
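The time windows described above can be sketched with the standard datetime module. This is only an illustration of the arithmetic, assuming that the background window runs for bckgr_aq seconds starting at tback_start (or at timestamp when tback_start is not given); the helper time_windows is hypothetical and not part of the package.

```python
from datetime import datetime, timedelta

FMT = "%Y-%m-%d %H:%M:%S"


def time_windows(timestamp, duration=30, tback_start=None, bckgr_aq=10):
    """Return ((data_start, data_end), (back_start, back_end)) as datetimes.

    Assumption: the background window spans bckgr_aq seconds from
    tback_start, which defaults to the start of data taking.
    """
    start = datetime.strptime(timestamp, FMT)
    end = start + timedelta(seconds=duration)
    if tback_start is None:
        back_start = start
    else:
        back_start = datetime.strptime(tback_start, FMT)
    back_end = back_start + timedelta(seconds=bckgr_aq)
    return (start, end), (back_start, back_end)


# Default settings: 30 s of data, background in the first 10 s
data, background = time_windows("2021-10-26 21:45:05")
print("data window:      ", data[0], "to", data[1])
print("background window:", background[0], "to", background[1])
```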

Loading in the data from NXCALS is straightforward:

ThisLM.load_data_nxcals(spark)

where spark is the variable instantiated after connecting to Spark. An optional argument is blm_integration_time which allows one to choose the exact variable on NXCALS to be loaded in.

Old style lossmaps (before 2020)

When retrieving an old loss map, the convention for the timestamp was slightly different. The current convention follows that of the OP pylossmaps tool: the timestamp refers to the start of the complete time window of the loss map (including both the background data and the loss map itself). Previously, the timestamp referred to the moment of the peak losses, and the background was specified manually. This can be mimicked by shifting the timestamp by a few seconds (to not start on the peak) and manually giving the timestamp for the background with tback_start:

ionLM_test = lm.MeasuredLossMap(timestamp='2018-11-08 17:33:49',

duration=6,

tback_start='2018-11-08 17:33:37',

bckgr_aq=10,

machine=lm.Machine.LHC,

lmtype=lm.LMType.B1H,

particle=lm.Particle.LEAD_ION,

energy=6370)

Plotting a LossMap

Plotting a loss map is straightforward:

lm.plot_lossmap(ThisLM)

The following optional arguments are available:

- norm=None: the normalisation to be used; options are "total", "coll_max", "max", and "none" for any loss map, and additionally "total_per_time", "coll_max_per_time", and "max_per_time" for measured loss maps
- timestamp=None: the timestamp to be used for the plot (only for measured loss maps); if no timestamp is specified, the one with the maximum loss will be used
- background_std=0: the amount of background subtraction to perform (in sigma)
- layout=lhc_lattice_file: the twiss file with the layout
- label_fake_spikes=True: whether to mark suspected fake spikes or not
- font=None: a custom font if wanted
- fig_size=(20, 12): the size of the figure
- zoom=True: whether or not to add a zoom plot on the collimation insertion
- plot_range=(None, None): the plot range; if None is specified, the full range of ThisLM.machine is used
- zoom_range=(None, None): the range of the zoom plot; if None is specified, the collimation insertion of ThisLM.machine is used
- roi=None: the region of interest; if None is specified, the DS region of ThisLM.machine and ThisLM.beam is used
- grid=True: whether or not to plot a grid
- caption=True: whether or not to add an automatic caption
- outfile=None: a filename to save the plot
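The exact definitions of the normalisations live in the package. As a rough sketch, assuming that "total" divides by the sum of all losses, "coll_max" by the highest collimator loss, "max" by the highest loss of any type, and "none" leaves the data untouched, the idea looks as follows. The function normalise and the toy data are hypothetical.

```python
def normalise(losses, norm="coll_max"):
    """Normalise a list of (name, loss_type, value) tuples.

    The meaning of each norm option is an assumption inferred from its name.
    """
    values = [value for _, _, value in losses]
    if norm == "none":
        factor = 1.0
    elif norm == "total":
        factor = sum(values)
    elif norm == "max":
        factor = max(values)
    elif norm == "coll_max":
        factor = max(value for _, kind, value in losses if kind == "coll")
    else:
        raise ValueError(f"Unknown normalisation: {norm}")
    return [(name, kind, value / factor) for name, kind, value in losses]


# Made-up losses: two collimators, one cold element, one warm element
toy = [
    ("tcp", "coll", 4.0),
    ("tcs", "coll", 2.0),
    ("magnet", "cold", 2.0),
    ("drift", "warm", 8.0),
]

for option in ("total", "coll_max", "max", "none"):
    print(option, [value for _, _, value in normalise(toy, option)])
```

With "coll_max" the highest collimator loss becomes 1, so any aperture loss can be read directly as a fraction of the peak collimator loss.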

If finer control is needed, the matplotlib figure and axes objects are returned by the function, so that standard matplotlib manipulations can be applied to them (axes is always a list, even when no zoom plot is drawn):

fig, axes = lm.plot_lossmap(ThisLM)

axes[0].set_ylabel('Very cool data')

Finally, an interactive plot, which allows changing the normalisation on the fly, can be zoomed in, and shows the element names on mouse-over, can be created as follows:

lm.plot_lossmap_interactive(ThisLM)

Exporting a LossMap to csv

As the losses data is stored in a pandas dataframe, one can simply call the to_csv() function on it or on any dataframe generated by an accessor.

For instance, to store the raw data to a file raw_data.csv:

ThisLM.losses.to_csv('raw_data.csv')

And to store the data with the mean of the background subtracted to a file losses_no_backgr.csv:

ThisLM.losses.lossmap.inefficiency().to_csv('losses_no_backgr.csv')

Accessing Existing LossMaps on HDFS

WIP

Data fields

Every LossMap object (SimulatedLossMap or MeasuredLossMap) has the following fields (that can be set at initialisation or manually afterwards):

- lmtype: options are LMType.B1H, LMType.B1V, LMType.B2H, LMType.B2V, LMType.DPpos, LMType.Dpneg, or LMType.OTHER
- machine: options are Machine.LHC, Machine.HLLHC, Machine.SPS, Machine.FCCEE, and Machine.FCCHH
- particle: options are Particle.PROTON, Particle.LEAD_ION, and Particle.LEAD_PSI
- energy: in GeV
- betastar: in cm
- beam_process: options are BeamProcess.INJECTION, BeamProcess.START_OF_RAMP, BeamProcess.RAMP, BeamProcess.FLAT_TOP, BeamProcess.SQUEEZE, BeamProcess.STABLE_BEAMS_XRP_OUT, and BeamProcess.STABLE_BEAMS
- description: any string giving additional information about the loss map

Additionally, the following fields can only be set if lmtype == LMType.OTHER (otherwise they are set automatically):

- beam: options are Beam.BEAM1, Beam.BEAM2, and Beam.BOTH
- plane: options are Plane.HORIZONTAL, Plane.VERTICAL, and Plane.BOTH
- rfsweep: options are RFsweep.POS, RFsweep.NEG, and RFsweep.NONE
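One way to picture how a loss map type can fix the beam and plane automatically is an Enum whose members carry the implied values. The classes below are stand-ins written for this illustration only; they borrow the names listed above but are not the package's own classes, and they cover only the four betatron types.

```python
from enum import Enum


class Beam(Enum):
    BEAM1 = 1
    BEAM2 = 2
    BOTH = 3


class Plane(Enum):
    HORIZONTAL = "H"
    VERTICAL = "V"
    BOTH = "HV"


class LMType(Enum):
    # Each betatron loss map type carries the beam and plane it implies
    B1H = (Beam.BEAM1, Plane.HORIZONTAL)
    B1V = (Beam.BEAM1, Plane.VERTICAL)
    B2H = (Beam.BEAM2, Plane.HORIZONTAL)
    B2V = (Beam.BEAM2, Plane.VERTICAL)

    @property
    def beam(self):
        return self.value[0]

    @property
    def plane(self):
        return self.value[1]


# The beam and plane follow directly from the chosen type
lmtype = LMType.B2V
print(lmtype.name, lmtype.beam, lmtype.plane)
```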

Every MeasuredLossMap has the following additional fields (that can be set at initialisation or manually afterwards):

- timestamp: the timestamp representing the loss map; typically this is the start of data taking
- duration=30: the time window (in seconds) under consideration
- tback_start=timestamp: the start of background data; typically this is the same as timestamp, but if the background data is outside the time window (e.g. when it was taken some time earlier) it can be specified manually
- bckgr_aq=10: the number of background acquisitions (in seconds)
- BLM_integration_time: the NXCALS variable used (but can only be set when loading the data); the options are BLMintegrated.RS_40us, BLMintegrated.RS_80us, BLMintegrated.RS_320us, BLMintegrated.RS_640us, BLMintegrated.RS_2ms56, BLMintegrated.RS_10ms, BLMintegrated.RS_10ms24, BLMintegrated.RS_81ms92, BLMintegrated.RS_655m36, BLMintegrated.RS_1s31072 (default), BLMintegrated.RS_5s24288, BLMintegrated.RS_20s97152, and BLMintegrated.RS_83s88608

When the data is loaded in (both for measured and simulated data), the following fields are available:

- losses: a pandas dataframe containing all (un-normalised) losses; the losses_type column is an Enum with options Losses.COLL, Losses.COLD, Losses.WARM, Losses.XRP, and Losses.UNKNOWN
- max_loss: the maximum loss on the collimators
- t_max_loss: the timestamp of the maximum loss on the collimators (only for MeasuredLossMaps)
- DS_inefficiency: the maximum inefficiency over the dispersion suppressor region

Additionally, after loading the data for a SimulatedLossMap it will store the content of the different files as pandas dataframes, with the positions already shifted and reflected:

- positions: the twiss file containing the positions of the collimators
- collgaps: the collgaps file (if the data was created with FLUKA)
- coll_summary: the merged coll_summary files; if FLUKA, this is created from the fort.208 files
- aperture_losses: the merged aperture_losses files
- aperture_binned: the aperture losses, binned to the interpolation resolution

The losses dataframe can be quite large; for quick calculations one can use a dedicated custom accessor called lossmap. To use any of the accessor functions, append .lossmap. followed by the function name to the dataframe, e.g.

ThisLM.losses.lossmap.total_loss

The following accessor functions and variables are available:

- total_loss: the sum of all losses
- max_loss: the peak losses (irrespective of type)
- max_loss_per_type: the peak loss of each losses type
- data_background_sub(background_std=0): the losses data after background subtraction
- inefficiency(norm="coll_max", background_std=0): the inefficiency, i.e. the losses data after background subtraction and normalisation; possible normalisations are "total", "coll_max", "max", and "none" for any loss map, and additionally "total_per_time", "coll_max_per_time", and "max_per_time" for measured loss maps
- inefficiency_by_type(norm="coll_max", background_std=0): the inefficiency sorted by losses type
- max_inefficiency(roi, norm="coll_max", background_std=0): the maximum inefficiency; only aperture losses are considered, and only over a region of interest (roi = [s_min,s_max]) such as the DS region
- fake_spikes(norm="coll_max", background_std=0, threshold=1e-4, ratio=2): a list of suspected fake spikes
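To illustrate what background subtraction "in sigma" and a maximum inefficiency over a region of interest could look like, here is a sketch in plain Python. It assumes that the subtracted level is the background mean plus background_std standard deviations, that negative results are clipped to zero, and that the region of interest includes its end points; none of this is confirmed by the package. Both helper functions are hypothetical and only share their names with the accessor functions for readability.

```python
from statistics import mean, pstdev


def subtract_background(signal, background, background_std=0):
    """Subtract mean + background_std * sigma of the background samples.

    Assumption: the result is clipped at zero.
    """
    level = mean(background) + background_std * pstdev(background)
    return max(signal - level, 0.0)


def max_inefficiency(losses, roi, norm_factor):
    """Highest normalised aperture loss inside roi = (s_min, s_max).

    losses: list of (s, loss_type, value); collimator losses are skipped.
    """
    s_min, s_max = roi
    inside = [
        value / norm_factor
        for s, kind, value in losses
        if kind != "coll" and s_min <= s <= s_max
    ]
    return max(inside, default=0.0)


# Background samples with mean 2 and standard deviation 1
samples = [1.0, 3.0]
print(subtract_background(10.0, samples))                    # mean only
print(subtract_background(10.0, samples, background_std=2))  # mean + 2 sigma

# Made-up losses; the peak collimator loss of 4.0 is the normalisation
toy = [(100.0, "coll", 4.0), (150.0, "cold", 0.5), (160.0, "cold", 1.0), (400.0, "cold", 2.0)]
print(max_inefficiency(toy, roi=(140.0, 200.0), norm_factor=4.0))
```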

The following accessor variables can only be used on a MeasuredLossMap:

- timestamps: a list of available timestamps
- total_loss_per_time: the total loss calculated for each timestamp
- max_loss_per_time: the peak loss calculated for each timestamp
- t_max_loss: the timestamp of the overall maximum loss
- max_loss_per_time_per_type: the peak loss calculated for each timestamp and for each losses type
- t_max_loss_per_type: the timestamp of the maximum loss for each losses type
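The per-timestamp quantities can be pictured with a small stand-alone example. The nested dictionary below is a made-up stand-in for the measured data (one set of BLM readings per timestamp), and the two helper functions are hypothetical sketches that merely reuse the accessor names.

```python
def total_loss_per_time(readings):
    """Sum the readings of all BLMs for each timestamp."""
    return {t: sum(blms.values()) for t, blms in readings.items()}


def t_max_loss(readings):
    """Return the timestamp at which the highest single reading occurs."""
    peak_per_time = {t: max(blms.values()) for t, blms in readings.items()}
    return max(peak_per_time, key=peak_per_time.get)


# Made-up readings: timestamp -> {BLM name: loss}
readings = {
    "21:45:05": {"blm_a": 1.0, "blm_b": 0.5},
    "21:45:06": {"blm_a": 4.0, "blm_b": 1.0},
    "21:45:07": {"blm_a": 2.0, "blm_b": 0.25},
}

print(total_loss_per_time(readings))
print(t_max_loss(readings))
```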

Work in Progress

- Centralised database on HDFS containing plenty of loss maps performed by the team, easily searchable

- Integration with CollDB.yaml etc

- Optimisation of speed (NXCALS loading speed, and plotting speed)

Maintainers

Andrey Abramov and Frederik F. Van der Veken

Manual written by Frederik F. Van der Veken, 20th March 2022